Three Codes of Conduct in AI

Microsoft’s, NLDigital’s and the Code of Europe

Since 2018 several major parties have issued guidelines for reliable AI. These guidelines are designed for the development and implementation of ethics in AI. The hope is that this will keep AI people-driven and minimise risk while maximising the benefits of AI systems. In this blog, we look at 3 of these codes: The Code of Europe, NLDigital (formerly Nederland ICT), and Microsoft. What is the difference between these 3?

European guidelines

In June 2018 the European Commission hired a team of independent experts to create guidelines. These experts made a first version of the guidelines available in December 2018, after which anyone could critique them. The final version was presented in April 2019 (Figure 1).

The guidelines focus on 3 principles that a reliable AI should adhere to:

- Legal - the AI must comply with all laws

- Ethical - the AI must adhere to ethical principles and values

- Robust - the AI must be sound from both a technical and a social perspective.

The guidelines focus on the last two principles (ethical and robust). They are already partly reflected in existing laws, but this is not enough. Figure 1 also indicates that all seven guidelines are equally important, mutually supportive, and should be evaluated regularly. The guidelines drawn up are:

Human supervision

People must always remain in control of the systems: the systems must have a supportive function and not undermine human autonomy. It is important that users of the system know enough about the subject on which the system makes decisions.

Technical soundness and safety

AI systems must be resilient and secure. To ensure that accidental damage is kept to a minimum, the decisions must be accurate, reliable, and reproducible.

Privacy and data policy

Privacy and data protection must be fully observed. This should include the quality and integrity of the data and access to this data. Before using the dataset, thought should be given to possible biases that may be present in the data.

Transparency

The data, systems, and models must be transparent. AI systems and the decisions they make must also be explainable in a way that the stakeholder can understand. In addition, people need to know if they are dealing with an AI system and what its capabilities and limits are.

Diversity, non-discrimination, and fairness

Unfair prejudice must be avoided. Examples include the marginalisation of vulnerable groups and the exacerbation of existing prejudice and discrimination. The AI systems must be accessible to everyone, regardless of any possible limitations on the part of the user.

Social and ecological well-being

AI systems must be sustainable and environmentally friendly. They must take into account the environment and all living beings.

Responsibility

Mechanisms should be put in place to create responsibility and accountability for AI systems and their results.

The European Commission itself already provides a list of questions that should make it easier for the designer of the system to create an ethical AI.

NLDigital (formerly Nederland ICT)

In March 2019, Nederland ICT presented an 'Ethical Code for Artificial Intelligence'. It contains guidelines that Nederland ICT drew up for working ethically with AI. These guidelines are based on the European guidelines described above. This makes the Netherlands the first country where the ICT sector has transposed the European guidelines into a code of conduct for companies. This code of conduct will develop further based on feedback from the professional field.

The rules require member companies of Nederland ICT to:

- be aware of the impact that the results of the AI system may have on public values

- clarify when a user is dealing with AI and who has responsibility

- be aware of and transparent about both the possibilities and shortcomings of AI and communicate about them (to minimise bias and increase inclusive representation)

- provide insight into the data or offer possibilities for this

- allow all those directly involved to see the results of the AI system

- provide users with feedback opportunities and conduct effective monitoring of the application's behaviour

- share knowledge about AI, both within and outside the sector

- provide information within the chain about the systems and underlying uses of the data, data use outside the European Union, technologies used and the learning curve of the system

Microsoft

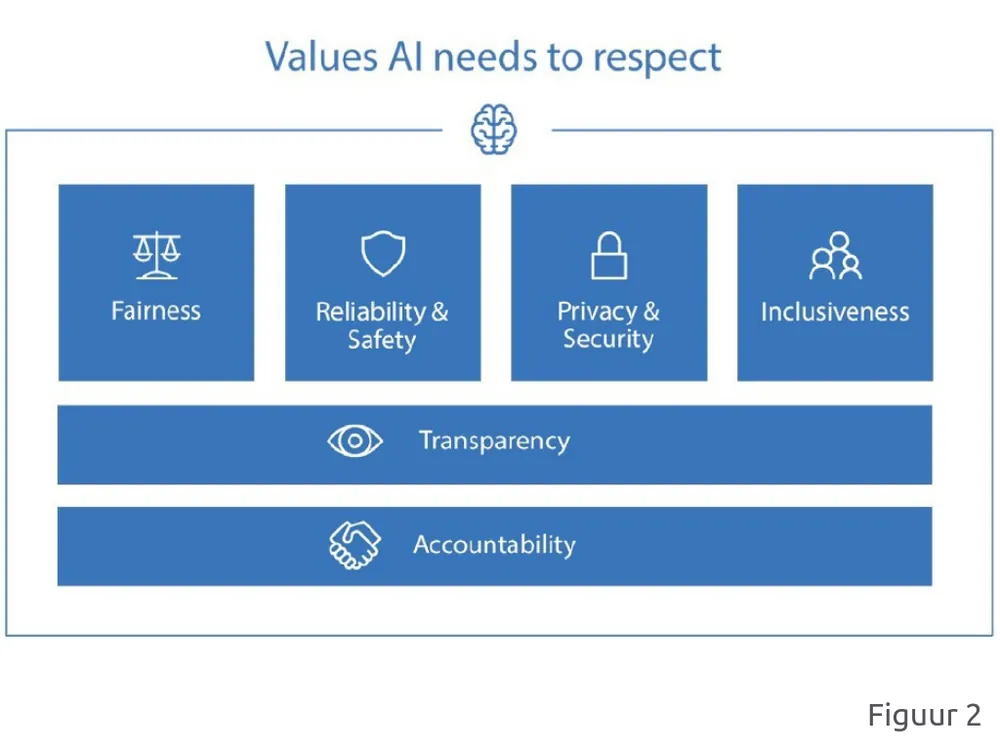

Microsoft has not yet presented separate guidelines, but it has published a book setting out values for AI that more or less correspond to points that also appear in the European guidelines. The values are shown in Figure 2.

The values are:

Fairness

AI systems must treat everyone equally. If AI systems are well designed, they could make fairer choices than humans because they act more logically. However, because humans build the AI systems and might use imperfect data, the systems could still work unfairly. It is therefore important that developers are aware of the ways in which bias can occur in AI systems and what the consequences are.

Training data must be representative of the world or at least the part of the world where the AI system will be used. It is important to take into account the biases that may already be present in the data. It is also important that users of the system know enough about the limits of the system. Finally, it is mentioned that the industry should do its best to create techniques that can detect possible unfairness.
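One way to make the representativeness check concrete is to compare how often each group occurs in the training data with its share in the population the system will serve. The sketch below is only an illustration: the function name `representation_gaps`, the 5 percentage point tolerance and the example figures are all assumptions, not something taken from Microsoft's text.

```python
from collections import Counter


def representation_gaps(samples, reference_shares, tolerance=0.05):
    """Return the groups whose share in `samples` differs from the
    reference share by more than `tolerance` (hypothetical helper)."""
    counts = Counter(samples)
    total = len(samples)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            # Positive value: over-represented, negative: under-represented.
            gaps[group] = round(observed - expected, 3)
    return gaps


# Made-up example: group labels in the training data versus population shares.
training_groups = ["a"] * 80 + ["b"] * 12 + ["c"] * 8
population = {"a": 0.6, "b": 0.3, "c": 0.1}
gaps = representation_gaps(training_groups, population)
print(gaps)
```

A check like this only shows whether groups are over- or under-represented. It says nothing about biases hidden in the labels or the features themselves, so it can be at most a first step.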

Reliability

AI systems must work reliably and safely. First, it is important to test well and to know under what conditions the system works best and where there is room for improvement. In addition, possible attacks or unintentional interactions will also have to be considered. And people will always have to think carefully about the system and when to use it. Information will have to be shared with customers so that incorrect behaviour can be corrected as quickly as possible.

Microsoft also provides steps here to make AI systems as safe and reliable as possible, such as:

- Systematic evaluation of the quality and applicability of the system.

- Processes for documenting and auditing the systems.

- When AI systems make decisions about people, you need to be able to provide adequate explanations of how the system works, including information about training data, errors during training, and, most importantly, consequences and predictions.

- If AI systems make decisions about people, domain experts must be included in the designing process.

- It is necessary to evaluate when and how an AI system asks for help from a human and how control can be transferred in a proper way.

- There must be a good opportunity for user feedback.
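The step about asking a human for help can be sketched as a simple confidence threshold: when the model is not sure enough, it makes no decision and hands the case over for review. The function name `decide_or_defer`, the threshold of 0.9 and the field names are assumptions made for this illustration.

```python
def decide_or_defer(label, confidence, threshold=0.9):
    """Let the model decide only when it is confident enough;
    otherwise hand the case to a human reviewer (hypothetical policy)."""
    if confidence >= threshold:
        return {"decision": label, "decided_by": "model"}
    # Below the threshold the model only suggests; a person decides.
    return {"decision": None, "decided_by": "human_review", "suggested": label}


print(decide_or_defer("approve", 0.95))
print(decide_or_defer("approve", 0.60))
```

In practice, the right threshold depends on the domain and on how costly a wrong decision is, which is exactly the kind of evaluation this step asks for.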

Privacy and security

People will not share information about themselves until they are fully convinced that the data will be properly processed, and since a lot of data is important for AI systems, the data must be stored properly. Not only must the data comply with the European Union's General Data Protection Regulation (GDPR), but other privacy rules must also be applied.

In addition, processes need to be put in place that track relevant information about the data (such as when it is retrieved and under what circumstances) and how it is retrieved and used. Access and use must be audited.
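A minimal sketch of such tracking is a data store that writes an audit entry for every read. The class name `AuditedStore` and the logged fields (time, user, key, purpose) are assumptions chosen for illustration. A real system would write to tamper-resistant storage rather than a list in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditedStore:
    """In-memory store that records every read (illustrative only)."""
    records: dict
    log: list = field(default_factory=list)

    def read(self, key, user, purpose):
        # Record who asked for which record, when, and for what purpose.
        self.log.append({
            "when": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "key": key,
            "purpose": purpose,
            "found": key in self.records,
        })
        return self.records.get(key)


store = AuditedStore({"r1": {"age": 40}})
store.read("r1", user="alice", purpose="model training")
print(store.log)
```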

Finally, privacy, encryption, separating the data from identifying information, and protecting it from misuse or hacking are also important.
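Separating the data from identifying information could look roughly like the sketch below, which splits a record into an identity part and a payload that are linked only by a random token. The field names in `IDENTIFYING_FIELDS` and the function `split_record` are assumptions for this example. Note that this is pseudonymisation rather than anonymisation: whoever holds both parts can still link them.

```python
import secrets

# Assumption: which fields count as identifying depends on the dataset.
IDENTIFYING_FIELDS = {"name", "email"}


def split_record(record):
    """Split a record into identifying fields and a payload,
    linked only by a random token (hypothetical helper)."""
    token = secrets.token_hex(16)
    identity = {k: v for k, v in record.items() if k in IDENTIFYING_FIELDS}
    payload = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    payload["token"] = token
    return token, identity, payload


token, identity, payload = split_record(
    {"name": "Ada", "email": "ada@example.org", "age": 36}
)
print(identity)
print(payload)
```

The identity part and the token mapping would then be stored separately, with stricter access controls than the payload used for training.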

Inclusiveness

AI systems must benefit and empower everyone. The system must be designed so that it understands the context, needs, and experiences of all users.

AI can be powerful in making access to information, education, work, services, and other opportunities easier for people with disabilities through, for example, speech-to-text translation or visual recognition.

Transparency

AI systems must be comprehensible. The preceding 4 values are all essential to achieving transparency. If AI systems contribute to people's important life decisions, it is important that people can understand the basis on which the decisions were made. This might involve giving contextual information about how the system works and interacts with the information. That would make it easier to detect potential biases, errors, and unintended results.

It is not enough to just publish the algorithms (especially in the case of deep neural networks), so it's important to take a more holistic approach, where the designers of the system explain the most important elements as completely and clearly as possible.

Microsoft is working with other organisations to investigate the best way to enable transparent AI. This involves, for example, checking whether algorithms that are easier to understand are a good alternative to more complex ones. More research is needed to create good techniques that ensure greater transparency.

Accountability

The last value is accountability: the people who develop and deploy the AI system are responsible for how the systems work. We can look at how this is already happening in, for example, healthcare and privacy. Internal review boards will have to supervise and monitor the operation.

According to Microsoft, it is important to integrate the above-mentioned six principles into existing activities. Microsoft itself uses its internal AI and Ethics in Engineering and Research (AETHER) committee to keep an eye on this.

Differences between and similarities in the 3 Codes of Conduct

If you look at the different values and guidelines described above, you will see that there are many similarities between the three parties. Examples are the soundness, privacy, transparency, diversity, and responsibility mentioned by each party. However, there are subtle differences. Microsoft, for example, goes into more detail on how to maintain the data policy, while the other parties go into this in less detail.

The European guidelines name something that does not appear in the other guidelines: social and ecological well-being. This is not only about the social impact (which is also reflected in Point 1 of the Nederland ICT rules), but also the environmental impact that AI systems can have.

Nederland ICT, on the other hand, is the only one to explicitly mention the task of sharing knowledge outside the sector and providing education about ICT. Both the European guidelines and Microsoft mention that it is important to do more research but not that everyone should be trained.

The guidelines can be roughly divided into three groups: fairness/inclusiveness, transparency, and reliability/safety.

Fairness and inclusiveness

The European Commission as well as Nederland ICT and Microsoft all mention fairness and inclusiveness in their guidelines. This means that an AI system must treat everyone equally. It is especially important to ensure that your training data is representative and free from bias.

Of the three parties, Nederland ICT mentions it in the least detail. Nederland ICT has far fewer guidelines than the other two parties, which makes them very practical and manageable. This may have been a conscious choice. For example, Nederland ICT's rules do not reflect the fact that systems must be accessible to everyone (regardless of possible disabilities). Also, Nederland ICT mentions countering biases only briefly (in Points 1 and 3), while it is a much bigger part of the other guidelines.

All in all, it can be said that all three parties agree that it is important to ensure that the AI created will work well and fairly for everyone.

Transparency

Transparency, too, is discussed extensively by all three parties. Transparency means that the system and its creators must be transparent to the users of the system. All three parties agree, for example, that users must always be able to receive feedback as to why a particular decision was taken. Both the European Commission and Microsoft also mention that this can lead to a trade-off between performance and explainability. None of the parties indicates very clearly how far transparency and explainability should go. However, it is clear that with these rules, 'black box algorithms' would no longer be allowed.

And though responsibility is mentioned by everyone, no one seems to discuss it in depth. There is mostly just the mention that it is important to be clear about this. Nederland ICT also explicitly states that these responsibilities must also be clear to the user.

Reliability and safety

Reliability and safety include issues such as privacy, data policy, and monitoring. The system must be well protected against both intentional and unintentional damage and attacks.

While Microsoft and the European Commission both mention that people should always be in control, Nederland ICT is the only organisation not to address this. Microsoft is the only one to mention the importance of including domain experts in the design process. Microsoft is also the only one that does not explicitly mention that users should always be informed when AI systems are used in a decision.
